At ClearFeed, large language models (LLMs) are central to our AI-powered support workflows. From classifying messages to answering knowledge base queries, LLMs sit at the core of how we help teams manage support at scale.

Until now, these tasks ran on models from providers like OpenAI and Google, chosen after careful evaluation on our internal datasets. While this worked for most teams, many customers asked for more control. They wanted to keep data within their approved providers, optimize for cost or latency, and avoid being tied to a single default vendor.

That’s why we’re introducing Bring Your Own Model in ClearFeed. What does this mean for your team?

- Keep data within your approved set of LLM providers

- Optimize for cost, latency, or accuracy based on your own requirements

- Avoid being tied to a single default vendor

How It Works

With Bring Your Own Model, ClearFeed routes all LLM calls (message classification, agent responses, and knowledge indexing) through the AI provider you configure. You only need to share the API key and base URL, and our team will enable it for your workspace.

1. Choose your provider

ClearFeed supports any vendor with an OpenAI-compatible API. This includes OpenAI, Amazon Bedrock, Anthropic, SambaNova Systems, and Google Gemini / Vertex AI.
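To make "OpenAI-compatible" concrete: such providers generally accept a POST to `/chat/completions` under the base URL, authenticated with a bearer token. The minimal sketch below builds (but does not send) a request of that shape from a base URL and API key. The `ProviderConfig` class, the `build_chat_request` function, and the example URL and key are hypothetical names chosen for illustration; this is not ClearFeed's actual implementation.

```python
import json
from dataclasses import dataclass
from urllib.request import Request


@dataclass(frozen=True)
class ProviderConfig:
    """Hypothetical container for the two values a workspace shares."""
    base_url: str  # for OpenAI itself this would be "https://api.openai.com/v1"
    api_key: str


def build_chat_request(cfg: ProviderConfig, model: str, user_text: str) -> Request:
    """Build, without sending, an OpenAI-style chat completion request."""
    url = cfg.base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }
    return Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {cfg.api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; swap in your provider's base URL and key.
cfg = ProviderConfig(base_url="https://llm.example.com/v1/", api_key="sk-placeholder")
req = build_chat_request(cfg, model="gpt-4.1-mini", user_text="Is this a bug report?")
```

Because only the base URL, key, and model name change between providers, the same request shape can point at any vendor that exposes this API.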

2. Configure your embedding model

Embeddings are what ClearFeed uses to turn your knowledge base into a searchable format inside our vector database. With Bring Your Own Model (BYOM), you can choose which embedding model is used. This choice directly affects the relevance of search results and the quality of answers generated from your knowledge base.
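As a rough illustration of why the embedding model matters, the toy example below ranks knowledge base articles by cosine similarity to a query vector. The three-dimensional vectors are made up and stand in for the output of a real embedding model, and the article titles are hypothetical. ClearFeed's actual vector database and ranking logic are not described here, so treat this as a sketch of the general technique only.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up vectors; a real embedding model returns hundreds or thousands of dimensions.
knowledge_base = {
    "How to reset your password": [0.9, 0.1, 0.0],
    "Billing and invoices": [0.1, 0.9, 0.1],
    "Connecting Slack channels": [0.0, 0.2, 0.9],
}


def search(query_vector: Sequence[float], top_k: int = 2) -> list:
    """Return the titles of the top_k articles most similar to the query."""
    scored = [
        (cosine_similarity(query_vector, vector), title)
        for title, vector in knowledge_base.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [title for _, title in scored[:top_k]]


# Pretend this is the embedding of "I forgot my password".
results = search([0.8, 0.2, 0.1])
```

A different embedding model would produce different vectors for the same text, which changes the ranking and therefore which articles feed into the generated answer.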

3. Map models to task tiers

Different support workflows need different levels of reasoning. To give you flexibility, ClearFeed organizes tasks into three tiers:

- Bronze → simpler tasks like message classification (maps to GPT-4.1-mini in OpenAI by default)

- Silver → regular tasks that need a balanced, general-purpose language model (maps to GPT-4.1 in OpenAI by default)

- Gold → difficult tasks that require advanced logical reasoning (maps to o3 in OpenAI by default)

You decide which model to run at each tier—balancing cost, latency, and accuracy based on your needs.
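The tier mapping can be pictured as a small lookup table with per-workspace overrides. The sketch below uses the OpenAI defaults listed above, written as the lowercase model IDs we assume the API expects; the `resolve_models` function and the override model name are hypothetical and only illustrate the idea.

```python
# Defaults taken from the tier list above (assumed API-style model IDs).
DEFAULT_TIER_MODELS = {
    "bronze": "gpt-4.1-mini",  # simpler tasks such as message classification
    "silver": "gpt-4.1",       # balanced, general-purpose tasks
    "gold": "o3",              # tasks needing advanced logical reasoning
}


def resolve_models(overrides: dict) -> dict:
    """Merge workspace overrides onto the defaults, rejecting unknown tiers."""
    unknown = set(overrides) - set(DEFAULT_TIER_MODELS)
    if unknown:
        raise ValueError(f"Unknown tier(s): {sorted(unknown)}")
    return {**DEFAULT_TIER_MODELS, **overrides}


# Hypothetical workspace that swaps only the Gold tier for its own model.
tier_models = resolve_models({"gold": "my-provider/reasoning-model"})
```

Tiers without an override keep their defaults, so a team can start by changing a single tier and compare cost, latency, and accuracy before changing the rest.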

Wrapping Up

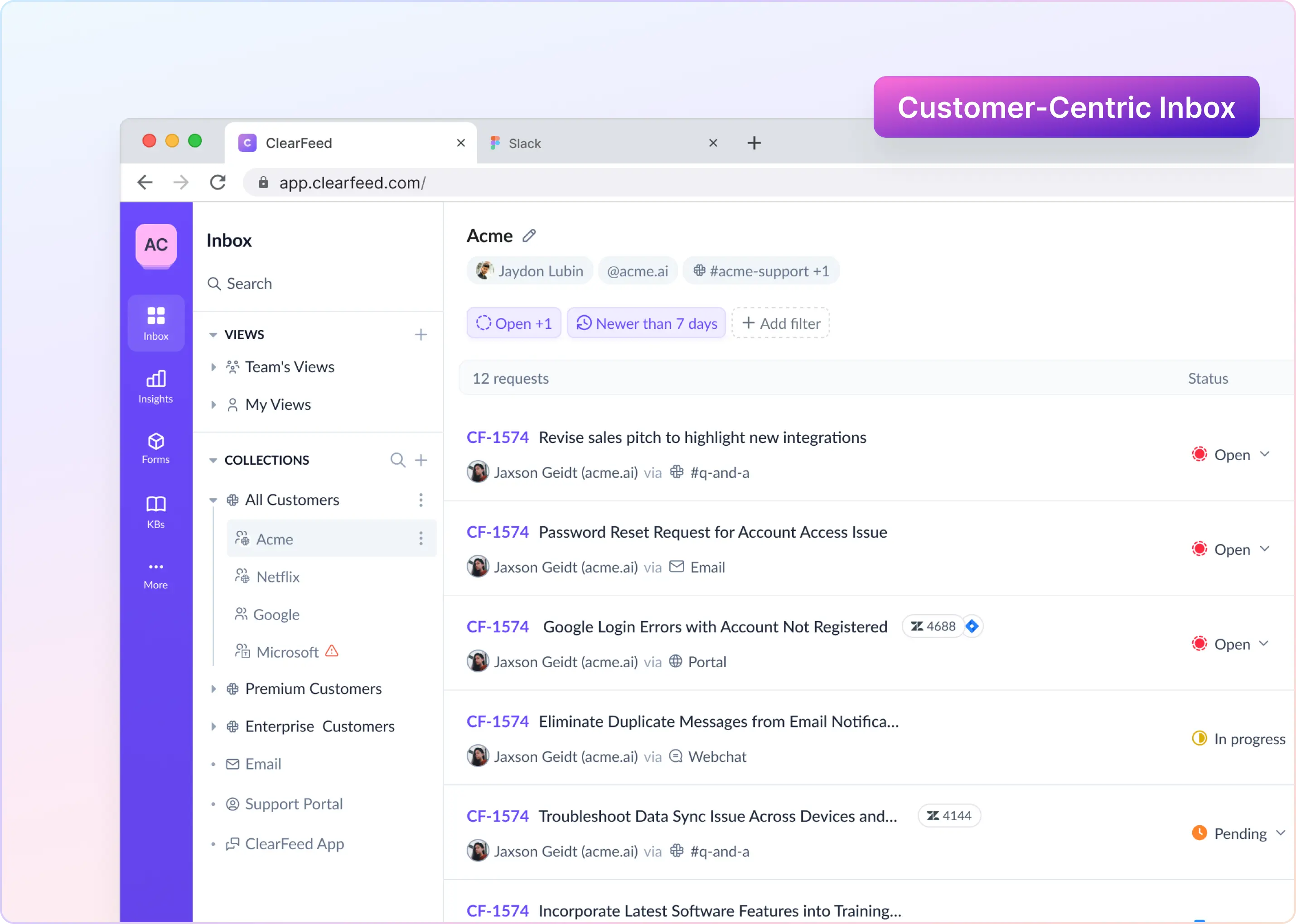

Bring Your Own Model gives support teams the flexibility to choose which AI models are used inside ClearFeed. You stay in control of providers, models, and embeddings, while ClearFeed continues to power seamless support in Slack and Teams.

If you’d like to enable this for your organization, get in touch with our team on Slack or contact us at support@clearfeed.ai and we’ll set it up for you.